Movies used to portray dystopias as spooky scenarios with monsters, ghosts, or spirits terrorizing people and disrupting their daily lives. Today, more and more documentaries and films show a different kind of scary: a world with little or no privacy. The last few months have been full of data privacy discussions. Whether the topic is Apple’s announcement regarding users’ pictures and messages, a bug that allowed “zero-click” installation of spyware on its products, or Venice’s new plan to track people, dystopian futures are coming closer and closer to reality.

Scanning pictures and messages

One of the most recent controversies concerns Apple’s new update, iOS 15. One of its new features is designed to help stop the spread of child sexual abuse material (CSAM) online. Although it sounds promising and is well intentioned, the system was the subject of heated debate online throughout August and September of 2021. Multiple companies, such as Facebook, Microsoft, and Google, already have their own methods for detecting CSAM [1], and even Apple has been scanning iCloud Mail since 2019, but the outrage began when the tech giant announced that it would begin to scan iCloud Photos and iMessage, its texting platform, as well [2].

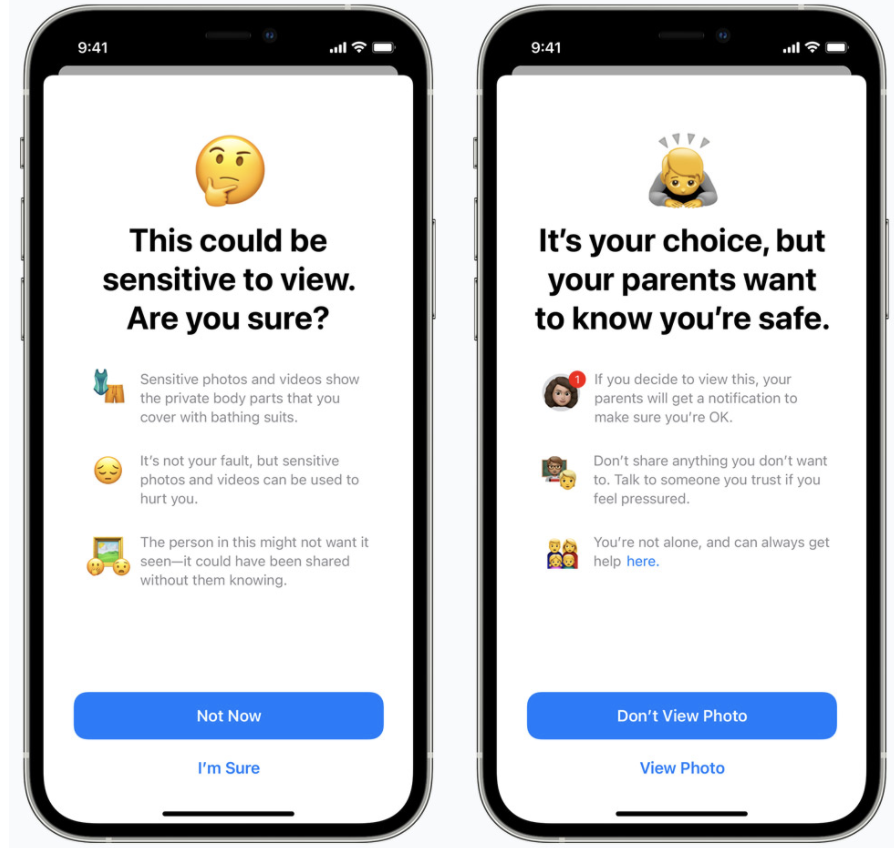

The less controversial (although still debated) part of the new iOS update is the feature intended to protect children from explicit sexual content shared over iMessage. According to Apple, “when receiving this type of content, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. As an additional precaution, the child can also be told that to make sure they are safe, their parents will get a message if they do view it” [3]. Although it is an opt-in feature, privacy advocates still worry about how those images will be scanned and analyzed, since they will be screened individually, unlike in the company’s second initiative.

The other component under discussion was the examination of users’ phones to look for CSAM. Unlike the previous change, this one is designed with privacy in mind, as it “performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations” [3] instead of analyzing pictures individually. Apple uses a technology that determines whether an image’s “fingerprint” matches an entry in its database without revealing the result of the comparison, which is encoded into a voucher. When the user uploads a photo to iCloud Photos, this voucher is uploaded along with encrypted data about the picture, and Apple can only interpret the contents of the vouchers if the person’s account crosses a threshold of known CSAM content. Once the threshold is reached, Apple “manually reviews each report to confirm there is a match, disables the user’s account, and sends a report to NCMEC.” In addition, the threshold is chosen such that there is less than a one in a trillion chance of Apple incorrectly disabling an account [3]. This leads to an important question: why are people so aggrieved by this update?
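To make the threshold idea concrete, here is a minimal Python sketch of hash matching against a list of known fingerprints. It is a simplification under stated assumptions, not Apple’s implementation: the names `fingerprint`, `Voucher`, `make_voucher`, and `account_needs_review` are hypothetical, SHA-256 stands in for the perceptual image hash a real system would use, the threshold values are made up, and the voucher here stores the match result in the clear, whereas Apple describes a design in which that result stays hidden until the threshold is crossed.

```python
import hashlib
from dataclasses import dataclass


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint (assumption): a real system would use a perceptual hash."""
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass(frozen=True)
class Voucher:
    """Hypothetical per-photo record; in this sketch the match flag is NOT encrypted."""
    photo_id: str
    matched: bool


def make_voucher(photo_id: str, image_bytes: bytes, known_hashes: set[str]) -> Voucher:
    # On-device step: compare the photo's fingerprint with the known-hash database.
    return Voucher(photo_id, fingerprint(image_bytes) in known_hashes)


def account_needs_review(vouchers: list[Voucher], threshold: int) -> bool:
    # Server-side step: flag the account only once enough vouchers report a match.
    return sum(v.matched for v in vouchers) >= threshold


# Toy data: two "known" items and three uploads, two of which match.
known_hashes = {fingerprint(b"known-1"), fingerprint(b"known-2")}
uploads = {"a": b"holiday", "b": b"known-1", "c": b"known-2"}
vouchers = [make_voucher(pid, data, known_hashes) for pid, data in uploads.items()]

print(account_needs_review(vouchers, threshold=3))  # False: only two matches
print(account_needs_review(vouchers, threshold=2))  # True: threshold reached
```

The point of the threshold is that a single match, which could be a false positive, never triggers a review on its own; only an accumulation of matches does.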

According to CNBC [4] and the New York Times [5], privacy advocates opposed the system because of what it could mean for data privacy and the damage it could cause. Many are concerned that the new feature will become a backdoor to encrypted data for governments and third parties, and are worried about the shift in Apple’s core values. The tech company has long been a strong advocate for privacy and has tried to resist governments’ requests as much as possible, but this move represents a break from that mindset, as Apple opens the door to iMessage and iCloud Photos screening.

A week after it announced its new plan and received negative reviews, Apple declared that it would only search pictures that were matched with databases from at least two organizations that are not under the same jurisdictional authority [1], but privacy advocates are still worried “that Apple will be pressed into expanding its CSAM screening to look for other content at the insistence of governments where Apple sells its devices” [1]. With this in mind, more than 90 privacy groups worldwide complained to the company and thousands of people signed a petition against the update [2]. The tech company eventually delayed the release of both of these tools, taking time to test the system more before making it available to the public.

Unnoticeable bug

Another Apple incident made headlines this past September, as one of people’s worst fears came true. Apple’s security team scrambled to release an emergency update after it was announced that a spyware company had been able to access Apple’s products without alerting their users. The highly invasive spyware, Pegasus, allows the attacker to do everything the device’s owner can do, such as “turn on a user’s camera and microphone, record messages, texts, emails, calls — even those sent via encrypted messaging and phone apps like Signal […]” [6]. As if this wasn’t bad enough, the New York Times stated that 1.65 billion Apple products had been exposed to the spyware since at least March 2020 [6].

Pegasus’ zero-click capability means that users do not notice when they are being hacked, unlike malware that spreads through links embedded in texts or emails. Anyone could have their Apple product compromised and not even know. It was first believed that the spyware had been delivered through a flaw in the CoreGraphics system [7], used to load GIFs, and Apple later declared that “processing a maliciously crafted PDF may lead to arbitrary code execution” and that it “is aware of a report that this issue may have been actively exploited” [8]. Less than a week after Citizen Lab discovered the flaw, Apple released the iOS 14.8 update and urged all of its customers to install it.

Real-life “Big Brother”

Another kind of dystopian future is taking shape in Venice, Italy. Its citizens had long hoped for a less tourist-heavy town, where prices were reasonable and places weren’t as crowded, and they finally got that during the global pandemic last year. But as people begin to travel again, the Venetian mayor has come up with a horror-movie-like solution to the overcrowding problem. In addition to doubling the city’s security cameras, Venice partnered with TIM, an Italian phone company, to gather data about its tourists and citizens [9]. “The system not only counts visitors in the vicinity of cameras posted around the city, but it also […] crunches who they are and where they come from”, according to CNN [10]. Although there are no apparent data privacy infringements, since no personal data is collected, some citizens and advocates worry about “Venice turning into ‘an open-air Big Brother,’” while others see it as the lesser of two evils when compared to the overcrowding of the city [9].

While Venice’s citizens and tourists can now be tracked and have their data collected by local authorities, billions of people worldwide were exposed to a “zero-click” bug through a flaw in Apple’s products, and may soon have their messages and photos scanned by the company. While some of these changes have good foundations and ideals, such as the attempt to stop CSAM distribution or to decrease the number of visitors in Venice, they might contribute to a data privacy leak that could expose many. Others, such as the Pegasus spyware, were so alarming that they could have been the plot of a horror movie. Either way, privacy as we know it is constantly changing; will that change bring a utopian or a dystopian future?

Bibliography

[3] “Expanded Protections for Children,” Apple, Sep. 03, 2021. https://www.apple.com/child-safety/.