On March 21, 2022, a Boeing 737-800 operated by China Eastern Airlines crashed into a mountainous region in southeastern China after the plane went into a nosedive from about 29,000 feet (8,800 meters). Investigators are still working to determine what caused the crash of the plane, which had 132 people aboard [1].

In aviation, flight safety takes a proactive approach to preventing accidents. Tragedies like that of the Boeing 737-800 push investigators to look more deeply into how human-centered design could save lives. But before looking at different design systems and how they affect people’s safety, one must first understand the history of accidents.

History of Accidents

Most improvements to existing systems and mechanics in aviation have followed severe accidents. This “trial and response” life cycle begins with an innovation, continues with an accident, and ends with a correction. Statistically, human error plays a bigger role than other causes such as environmental conditions (air traffic, meteorological conditions, etc.).

Because human error has played such a pivotal role in accidents, investigators began to analyze why pilots made so many errors. Between the 1940s and 1950s, the main cause of accidents was classified as “loss of control,” which covers situations where pilots lost control of the airplane, such as exceeding its structural limits or entering unusual attitudes that jeopardized the flight [2]. The root cause of the loss of control was often fatigue, excessive workload, or distraction, and it was therefore classified under “human performance and limitations.”

To fix this problem, more support systems and technological aids were introduced. Autopilot, autothrottle, and the flight director reduce the workload on tasks that once demanded too much of the pilot’s attention, freeing the pilot to focus on operations that need it more. By the 1970s, the effect of these innovations was visible as the rate of accidents dropped.

However, the main cause of accidents then shifted from “loss of control” to “controlled flight into terrain,” where problems in human interaction on board resulted in deadly accidents. To remedy poor decision-making, loss of situational awareness, and conflict between pilots, airlines introduced Crew Resource Management (CRM) courses to improve the interaction between pilots and the rest of the crew.

In the 1990s, the main cause of accidents shifted back to “loss of control,” but in a different sense: pilots now had so many technological aids that the aids themselves undermined the engineering approach to safety. In other words, the automation that was intended to help pilots was actually hindering them. Whatever the consequences of these deadly accidents, it is crucial to understand their causes. The many case studies of air mishaps make evident how big a role human-centered design plays in giving pilots a seamless experience.

Case Studies

To illustrate the relationship between pilots and technology, let us investigate two case studies that demonstrate the unpredictability of support instruments in the real operational context and the misuse of systems by pilots in a poorly designed environment.

The first case involves Boeing’s Maneuvering Characteristics Augmentation System (MCAS), introduced on its 737 Max. A 737 Max operated by Lion Air crashed after a sensor failure. To reduce fuel consumption, Boeing fitted the 737 Max with larger engines mounted farther forward, which changed the aircraft’s balance and handling. To compensate, Boeing installed MCAS, an anti-stall system that automatically pushes the nose down when sensor data indicates that the angle of attack is too high. Boeing believed the system to be so harmless, even if it malfunctioned, that the company did not inform pilots of its existence or include a description of it in the aircraft’s flight manuals.

In their investigation of Lion Air Flight 610, Indonesian authorities released a report pointing to black box data that showed the pilots struggling to maintain control of the plane as MCAS repeatedly pushed its nose down. In response, the pilots had to manually pull the nose back up. They countered the automated sequence, which recurred about every five seconds, 26 times before the plane crashed, because faulty sensor information kept forcing the nose down [3]. The incident shows the result of flawed human-centered design: the system did not adapt its behavior to the pilots’ inputs.
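The risk of acting on faulty information from a single sensor can be pictured with a small sketch. Everything in it is an illustrative assumption: the function names, the activation threshold, and the disagreement limit are hypothetical and are not taken from Boeing’s actual MCAS logic. The sketch only contrasts a trigger that trusts one angle-of-attack reading with one that cross-checks two readings before acting.

```python
# Hypothetical values chosen only for illustration; not real MCAS parameters.
ACTIVATION_AOA_DEG = 14.0      # assumed angle of attack that triggers nose-down trim
MAX_DISAGREEMENT_DEG = 5.0     # assumed limit on how far two sensors may differ


def single_sensor_trigger(aoa_deg: float) -> bool:
    """Trigger nose-down trim from one sensor reading, with no cross-check."""
    return aoa_deg > ACTIVATION_AOA_DEG


def cross_checked_trigger(aoa_left_deg: float, aoa_right_deg: float) -> bool:
    """Trigger only when two sensors agree that the angle of attack is too high."""
    if abs(aoa_left_deg - aoa_right_deg) > MAX_DISAGREEMENT_DEG:
        # The sensors disagree, so neither reading is trusted.
        return False
    return min(aoa_left_deg, aoa_right_deg) > ACTIVATION_AOA_DEG


# A faulty left sensor reports a very high angle while the right one reads normally.
faulty_left, healthy_right = 22.0, 2.0
print(single_sensor_trigger(faulty_left))                   # acts on the bad reading
print(cross_checked_trigger(faulty_left, healthy_right))    # declines to act
```

In this sketch the single-sensor version acts on the bad reading every time it is evaluated, while the cross-checked version stays passive and leaves control with the pilots.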

A diagram to demonstrate how the MCAS system works

The second case involves an Airbus A320 operated by Air Inter, which crashed after the pilot misread the descent profile because the flight path angle function and the vertical speed function looked so similar. Both values appeared as similar two-digit figures in the same display window, so the pilot dialed in a value he believed meant a 3.3-degree flight path angle when he had in fact commanded a descent of 3,300 feet per minute. The steep descent took place over high terrain around the airport, which left the crew no room to recover in time.
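A short calculation makes the gap between the two modes concrete. The sketch below converts a flight path angle into a vertical speed and back, using basic trigonometry. The ground speed of 145 knots is an assumed, typical approach value chosen for illustration and is not a figure from the accident report; the function names are likewise hypothetical.

```python
import math

FEET_PER_NAUTICAL_MILE = 6076.12


def vertical_speed_fpm(ground_speed_knots: float, flight_path_angle_deg: float) -> float:
    """Descent rate in feet per minute for a given ground speed and descent angle."""
    horizontal_fpm = ground_speed_knots * FEET_PER_NAUTICAL_MILE / 60.0
    return horizontal_fpm * math.tan(math.radians(flight_path_angle_deg))


def flight_path_angle_deg(ground_speed_knots: float, descent_rate_fpm: float) -> float:
    """Descent angle in degrees for a given ground speed and descent rate."""
    horizontal_fpm = ground_speed_knots * FEET_PER_NAUTICAL_MILE / 60.0
    return math.degrees(math.atan2(descent_rate_fpm, horizontal_fpm))


ASSUMED_GROUND_SPEED_KNOTS = 145.0  # illustrative assumption, not accident data

intended = vertical_speed_fpm(ASSUMED_GROUND_SPEED_KNOTS, 3.3)
actual_angle = flight_path_angle_deg(ASSUMED_GROUND_SPEED_KNOTS, 3300.0)

print(f"A 3.3 degree path corresponds to about {intended:.0f} ft/min")
print(f"3,300 ft/min corresponds to a path of about {actual_angle:.1f} degrees")
print(f"The commanded descent is about {3300.0 / intended:.1f} times steeper")
```

Under this assumed ground speed, the intended 3.3-degree path works out to roughly 850 feet per minute, so a commanded 3,300 feet per minute is close to four times the intended descent rate, even though the two entries look almost identical in the display window.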

After the tragedy, the display design was changed to prevent confusion between similar functions during descent. The Ground Proximity Warning System (GPWS) was also installed to warn the crew of an excessive rate of closure with the ground. Today, the GPWS has evolved into the Enhanced GPWS (EGPWS), which is linked to satellite positioning. This allows the system to recognize when a low altitude is consistent with the airport’s position and to account for the objects surrounding it. With all the relevant information displayed, pilots can be more aware of mountainous terrain close to the aircraft’s position.

Human Factor and Technology

Design has always been part of preventing and minimizing human error when it comes to aviation. In the past several decades, Boeing has initiated new design activities by looking at past operational events, operational objectives, and scientific studies that define human design requirements. Analytical methods like a mockup or a simulator evaluation are used to assess the quality of different solutions.

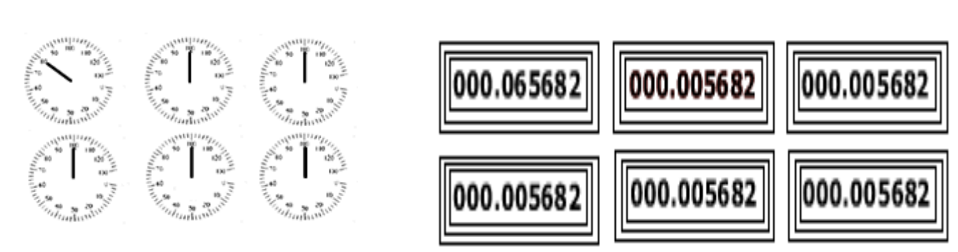

Consider the two figures, which simulate an aircraft’s display system. According to the Gestalt principles, a set of principles that explain how humans recognize patterns, the eye spots a difference more easily on the left-side display than on the right-side display, because the human mind notices overall configurations more readily than it analyzes individual values. Across the many displays a pilot must process, the pilot is more concerned with the symmetry of the data than with a precise indication. By noticing a deviating indicator immediately through the irregularity of the shape, a pilot can operate the aircraft with a lower workload.

The comparison between two display systems in the cockpit

Despite the age of advanced technology, both human factors scientists and flight crews have reported that crews are at times confused by the state of advanced automation, such as the autopilot. The Boeing Human Factors organization therefore works to reduce surprises when crews operate automated systems and to ensure that flight crews build a more complete mental model of them. The methodology is as follows: communicate the principles of the automated system, understand how flight crews use it, and document the strategies skilled flight crews apply when using the automation. Boeing uses the results to improve future interface designs for pilots and to make crew training more effective and efficient [4].

Lastly, training that familiarizes pilots with changes is important, even when the changes are small. According to the New York Times, a Southwest Airlines captain disclosed that pilots had to watch only a two-hour video before flying the new Max 8 aircraft [5]. Other airlines, such as United Airlines, put together their own training manuals showing the drastic differences between the new model and earlier ones. These examples show that any design change, no matter how small, introduces new risk into the equation. Thus, training on a new system is always a crucial part of novel designs.

Focusing on the user

With so many different technologies implemented in an aircraft, it is essential to understand how they affect the user experience and human safety as a whole. When disasters strike big organizations like Boeing, the public can be left confused and wary of the system. It is important to remember that there is a discrepancy between the user-friendly concept imagined by the airplane designer and the pilot-friendly concept based on the experience and knowledge of the operators.

The history of airplane accidents demonstrates that new solutions can bring new problems. Hence, it is always important to iterate on old systems while keeping the pilot’s point of view in mind. As with any design, one must always defer to the expertise of the end user, who represents the closest connection between the goal and the tools in use.

Bibliography

[1] The Associated Press, “US investigators fly to China to aid in plane crash probe,” ABC News, 02-Apr-2022. [Online]. Available: https://abcnews.go.com/International/wireStory/us-investigators-fly-china-aid-plane-crash-probe-83826529.

[2] R. Aviationlab, “Human Centered Design in aviation,” 10-Feb-2011. [Online]. Available: https://www.researchgate.net/publication/265594297_Human_centered_design_in_aviation.

[3] Code7700, “Lion Air 610,” Case Study: Lion Air 610, 02-Nov-2019. [Online]. Available: https://code7700.com/case_study_lion_air_610.htm.

[4] R. L. Satow, “Aero the role of human factors in improving aviation safety,” Boeing, 24-Jun-1999. [Online]. Available: https://www.boeing.com/commercial/aeromagazine/aero_08/human_story.html.

[5] S. Synergistics, “Flight Safety Depends on Human Centered Design,” Sophic Synergistics LLC, 24-Jun-2021. [Online]. Available: https://sophicsynergistics.com/2019/03/flight-safety/.