Last updated on January 12, 2024

In September 2020, Netflix released the film “The Social Dilemma” [1]. Featuring former employees of technology companies including Facebook, Google, Twitter, and Instagram, the film “accused” online platforms, especially social media, of manipulating their users and fostering political polarization through their algorithms. It drew strong reactions from audiences. In October, Facebook published its response, a statement entitled “What ‘The Social Dilemma’ Gets Wrong” [2]. Facebook claimed in the statement that its algorithm “keeps the platform relevant and useful,” implying that the Netflix film is a “conspiracy documentary,” but the statement as a whole seems to miss the point. Whether social media platforms such as Facebook like it or not, scholars have long been interested in the possibility that people become more polarized because of social media’s feed algorithms, a phenomenon commonly attributed to “filter bubbles.”

Information cocoons, echo chambers, and filter bubbles

The idea of filter bubbles can be traced back to earlier work by legal scholar and author Cass R. Sunstein. In his 2006 book “Infotopia” [3], Sunstein, aware of developments in information technology, described a future in which “it is […] simple to find, almost instantly, the judgments of ‘people like you’ about almost everything: books, movies, hotels, restaurants, vacation spots, museums, television programs, music, potential romantic partners, doctors, movie stars, and countless goods and services.” Sunstein predicted that this might bring unprecedented opportunities, such as crowdsourcing on a large scale, but could also cause problems. Extrapolating from findings on decision-making in smaller groups, Sunstein argued that large groups could also fall into “echo chambers,” or what he called “information cocoons,” in which “we hear only what we choose and only what comforts and pleases us.” This could have serious social consequences. If people are exposed only to information that pleases them and confirms their opinions, without ever encountering opposing views, they become more likely to believe, and insist, that they are right. Society would then split into smaller groups of like-minded people, none of which agree with one another (or, in more extreme cases, even know that the others exist). Society as a whole would become more segregated; fallacies would spread more easily and errors would become more likely.

What Sunstein probably did not foresee back in 2006, however, is that rather than actively choosing to accept only preferred information and stay in our information cocoons, we are now often “fed” information that is likely to interest us, whether we are aware of it or not. Recall the times when social media showed you interesting posts from people you do not follow, or when you clicked on a video from TikTok’s “For You” page. Even Google customizes search results based on who is searching, so when you and a friend search for the same thing, the results will probably not be identical. These online platforms keep track of each user’s activities on their sites (and sometimes on other sites): what they watch, whom they follow, what they “like,” and what they search for [2]. The companies then use trained algorithms, along with this data, to predict which content a specific user is likely to be interested in, and present that content to the user. This is often referred to as “algorithmic personalization” or “algorithmic filtering” [4]. The motivation for such algorithms is simple: as users, we want to find the most relevant or interesting content efficiently. Algorithmic filtering facilitates this, making users more willing to spend time on a given platform.
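To make the idea of algorithmic filtering a little more concrete, here is a deliberately simplified sketch in Python. It is a hypothetical toy, not how Facebook, TikTok, or Google actually rank content: it assumes each post is tagged with topics (the names `build_profile`, `rank_feed`, and the topic labels are made up for illustration) and scores new posts by how often the user engaged with those topics before. Even this crude rule shows how a feed can narrow: a post on a topic the user has never engaged with scores zero and is crowded out.

```python
from collections import Counter


def build_profile(history):
    """Toy 'interest profile': count how many past posts the user engaged with per topic."""
    return Counter(topic for post in history for topic in post["topics"])


def rank_feed(candidates, profile, k=3):
    """Return the k candidate posts whose topics best match the profile.

    A topic the user never engaged with contributes 0 to the score,
    so unfamiliar viewpoints sink to the bottom of the ranking.
    """
    def score(post):
        return sum(profile[topic] for topic in post["topics"])

    return sorted(candidates, key=score, reverse=True)[:k]


# Hypothetical engagement history: mostly posts about "party_a".
history = [
    {"id": "h1", "topics": ["party_a", "economy"]},
    {"id": "h2", "topics": ["party_a"]},
    {"id": "h3", "topics": ["sports"]},
]

# Hypothetical posts the platform could show next.
candidates = [
    {"id": "c1", "topics": ["party_b"]},
    {"id": "c2", "topics": ["party_a", "economy"]},
    {"id": "c3", "topics": ["sports"]},
    {"id": "c4", "topics": ["party_a"]},
    {"id": "c5", "topics": ["science"]},
]

profile = build_profile(history)
feed = rank_feed(candidates, profile)
print([post["id"] for post in feed])  # ['c2', 'c4', 'c3']
```

The post about "party_b" never reaches the feed. If the user then engages only with what is shown and the profile is rebuilt from that history, it tilts further toward "party_a", which is one way a filter bubble could reinforce itself. Real recommendation systems rely on far richer signals and learned models, so this is only an illustration of the general mechanism.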

However, as one might expect, algorithmic filtering can also create echo chambers for users of online platforms. These echo chambers are often called “filter bubbles,” a term coined by activist Eli Pariser and used as the title of his 2011 book, “The Filter Bubble” [5]. Unlike the echo chambers Sunstein conceived in the early 2000s, filter bubbles often do not involve actively choosing the content one is exposed to, and they are therefore likely to cause greater problems. Individual users who are not sufficiently aware of algorithmic filtering may mistake what they receive for what the great majority sees or thinks, and consequently conclude that their view is the mainstream or “right” one. The polarizing effect of a filter bubble is likely stronger than that of other echo chambers, since it is harder for the people inside it to perceive.

Are filter bubbles real?

While researchers have investigated various aspects of filter bubbles, they still disagree about the most basic questions: Do echo chambers exist online? Are filter bubbles real? In other words, do filtering algorithms really narrow the information people receive and polarize their opinions? According to Kitchens et al. [4], one of the fundamental reasons for these disagreements is “the lack of conceptual clarity, with vague and conflicting definitions of constructs, processes, and outcomes.” Indeed, there is no agreed-upon quantitative way of measuring how diverse a person’s information sources are or how extreme their opinions are, let alone whether and how much algorithmic filtering contributes to the polarization of those opinions. For instance, Lawrence et al. [6] examined which blogs research participants read and concluded that “blog readers are more polarized than either non-blog-readers or consumers of various television news programs.” Meanwhile, Gentzkow and Shapiro [7] looked at the kinds of websites people visited and compared these data with offline news consumption and face-to-face interactions. They found that “ideological segregation of online news consumption is low in absolute terms.” Although both studies concerned online echo chambers in politics, they used different measurements, so their results are hardly comparable. Differences like these fuel disagreements over whether echo chambers exist online.

A 2020 study by three professors at the McIntire School of Commerce at the University of Virginia provided a more comprehensive view of the issue [4] [8]. Kitchens, Johnson, and Gray used the browsing histories of 200,000 adults in the United States from 2012 to 2016 to study their information sources and their use of Facebook, Reddit, and Twitter. They concluded that the impact on people’s news consumption differed from platform to platform: Facebook had a polarizing effect, Reddit a moderating effect, and Twitter no significant effect (with the caveat that the data were collected before Twitter changed its feed algorithm in 2016). While the debate about filter bubbles persists, this research provides evidence that the way a platform presents content to users can noticeably affect what kinds of information users have access to, both on and off the platform. In other words, while not all online platforms create filter bubbles, algorithmic filtering does affect the diversity of our information sources, which in turn can influence (or indicate) how we perceive our own opinions.

With all this said, the mere possibility that filter bubbles exist already matters to individual internet users. People’s strong reactions to Netflix’s “The Social Dilemma” [1] reveal one simple fact: many of us do not want to live in a world where all the information we receive is carefully selected by algorithms that hide the full picture from us. That brings us to the question: what can we, as individual users, do in the face of filter bubbles?

In the face of filter bubbles

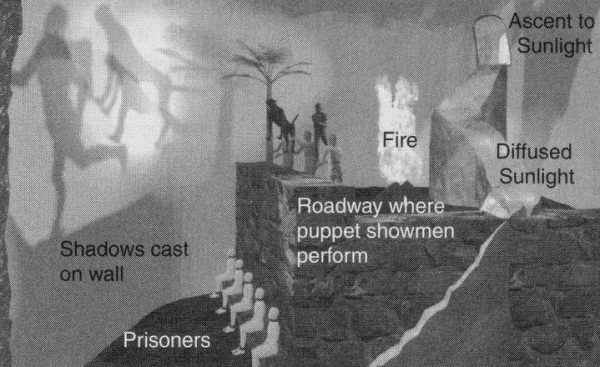

“Imagine human beings living in an underground, cavelike dwelling, with an entrance a long way up, which is both open to the light and as wide as the cave itself. They’ve been there since childhood, fixed in the same place, with their necks and legs fettered, able to see only in front of them, because their bonds prevent them from turning their heads around. Light is provided by a fire burning far above and behind them. Also behind them, but on higher ground, there is a path stretching between them and the fire. Imagine that along this path a low wall has been built, like the screen in front of puppeteers above which they show their puppets. […] Also imagine that there are people along the wall, carrying all kinds of artifacts that project above it – statues of people and other animals, made out of stone, wood, and every material.”

“The prisoners would in every way believe that the truth is nothing other than the shadows of those artifacts.”

Image Source: PHIL 201: HISTORY OF ANCIENT PHILOSOPHY, Hamilton Course Website

Above is the famous cave allegory from Plato’s “Republic” [9], written around 2,400 years ago in ancient Greece. Plato’s point is that, with the fire casting shadows of the statues onto the wall in front of the prisoners, they would take those shadows to be the entire world, because that is all they can see. The prisoners would never realize that a whole different, real world exists outside the cave.

While Plato used the cave allegory to explain his two-world metaphysics and epistemology, an interesting parallel can be drawn between the cave and filter bubbles (credit for this parallel belongs to Professor Justin Clark’s Philosophy 201, History of Ancient Western Philosophy, at Hamilton College). Users of online platforms are sometimes like the prisoners: we can see only the content that platforms present to us through their filtering algorithms. Like the shadows on the wall, this content is merely a representation of the world out there; it is not the world itself. All one can gain from such representations is, as Plato [9] put it, subjective assent, which we should never mistake for objective knowledge of the truth.

To return to the question posed at the end of the previous section: what can individual users do in the face of filter bubbles? The analogous question is how the prisoners in the cave could get rid of the shadows and come to know the real world. The answer seems obvious: just walk out of the cave. For us, this would mean stepping outside our filter bubbles and getting information from more varied sources. However, Plato might warn that this is not as easy as it seems. When a prisoner leaves the cave, his eyes, accustomed to darkness, are hurt by the glaring sunlight, and the pain might drive him back into the cave [9]. Similarly, exposure to information and views that challenge one’s own position can feel extremely uncomfortable, but actively seeking them out remains one of the best ways to understand a topic better. As Sunstein [3] pointed out in “Infotopia,” the internet has in fact made this much easier: with a single search, we can readily find almost any kind of information we need, including information that challenges our own opinions.

While exposure to different types of information is essential to escaping filter bubbles, Quattrociocchi et al. [10] might argue that exposure alone is not enough. Their study of U.S. and Italian Facebook users found that posts debunking people’s false beliefs might have the opposite effect, reinforcing those very beliefs. They suggested that this could be due to confirmation bias, the tendency to interpret information in favor of one’s own opinions. The implication is that we should try to detach ourselves from our positions when approaching new information. When we encounter opinions opposite to our own, we should not immediately try to find fault with their arguments; we should first see whether those arguments make sense in any way, and only then analyze them critically and decide whether we should actually change our minds.

Finally, it must be admitted that, given how extensively algorithmic filtering is used today, internet users can hardly escape filter bubbles entirely. Sometimes we take what we see for granted without realizing that we are trapped in one. Of course, this blog post does not aim to persuade readers to delete all their social media apps, stop watching videos online, or stay away from the internet. What matters most is to stay open-minded about different opinions and be ready to accept the possibility that we are wrong. With that in mind, sit back, relax, and maybe kill some time by checking what your friends are up to on your favorite social media platform.

Works Cited

- [1] J. Orlowski, Director, The Social Dilemma. [Film]. USA: Netflix, 2020.

- [2] Facebook Company, “What ‘The Social Dilemma’ Gets Wrong,” Oct. 2020. [Online]. Available: https://about.fb.com/wp-content/uploads/2020/10/What-The-Social-Dilemma-Gets-Wrong.pdf. [Accessed: Nov. 23, 2022].

- [3] C. R. Sunstein, Infotopia. New York: Oxford University Press, 2006.

- [4] B. Kitchens, S. L. Johnson, and P. H. Gray, “Understanding Echo Chambers and Filter Bubbles: The Impact of Social Media on Diversification and Partisan Shifts in News Consumption,” MIS Quarterly, pp. 1619-1650, Jan. 2020.

- [5] E. Pariser, The Filter Bubble. New York: Penguin Press, 2011.

- [6] E. Lawrence, J. Sides, and H. Farrell, “Self-Segregation or Deliberation? Blog Readership, Participation, and Polarization in American Politics,” Perspectives on Politics, vol. 8, no. 1, pp. 141-157, Mar. 2010.

- [7] M. Gentzkow and J. M. Shapiro, “Ideological Segregation Online and Offline,” NBER Working Paper Series, 2010.

- [8] S. L. Johnson, B. Kitchens, and P. Gray, “Opinion: Facebook serves as an echo chamber, especially for conservatives. Blame its algorithm,” The Washington Post, Oct. 26, 2020. [Online]. Available: https://www.washingtonpost.com/opinions/2020/10/26/facebook-algorithm-conservative-liberal-extremes/. [Accessed: Nov. 26, 2022].

- [9] Plato, Republic, trans. G. Grube, rev. C. Reeve. Indianapolis, IN: Hackett Publishing Company, 1992, pp. 186-212.

- [10] W. Quattrociocchi, A. Scala, and C. R. Sunstein, “Echo Chambers on Facebook,” 2016.