Last updated on September 25, 2024

It was the end of the fall semester of 2022. I got on a Hamilton shuttle to the airport, from where I would fly to Atlanta to visit a friend. I introduced myself to the guy sitting next to me, and just to kill time we started making small talk. It was a morning shuttle, and we were both drowsy and only half paying attention to the conversation (as people usually are during small talk), until I told him I was a computer science major. Suddenly, his eyes sparkling with excitement, he asked if I had heard of ChatGPT.

I knew what ChatGPT was and all the wonderful things it could achieve, but I had not yet had the chance to try it out myself. I did not expect my fellow passenger to tell me that he had used ChatGPT to write a paper in a few minutes, which got a D but, according to him, was “better than nothing.” Nor did I expect back then that people around the world would get so crazy about the chatbot, or that one day I would spend twenty minutes trying to persuade my dad, a big fan of sci-fi movies, that ChatGPT is not the start of robots developing self-consciousness and trying to overthrow humans. While ChatGPT is a significant step for artificial intelligence in natural language processing, this blog post aims to show that there is still a long way to go before humans can possibly create robots that are sentient or have their own consciousness.

ChatGPT: What It Is and How It Works

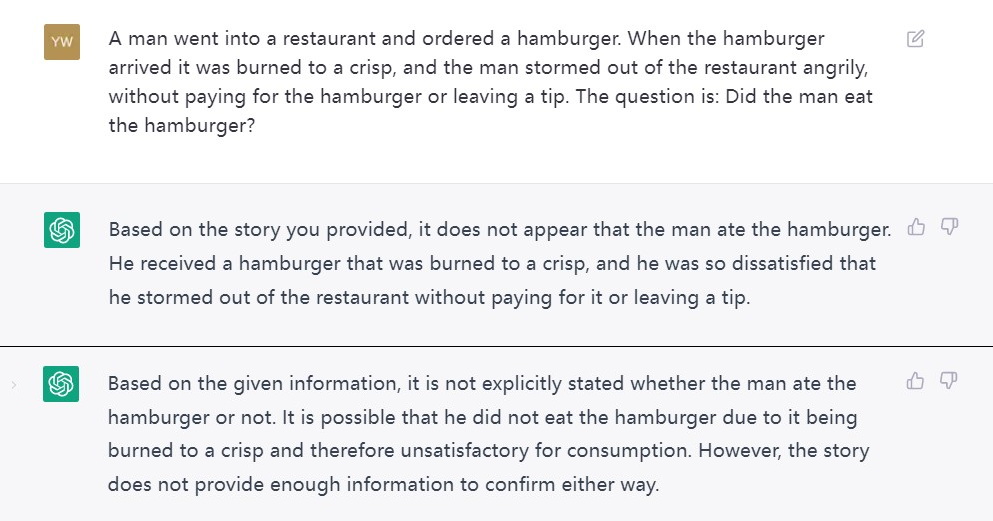

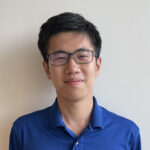

ChatGPT is an artificial intelligence (AI) chatbot developed by OpenAI. Available in multiple languages [1], ChatGPT has been the center of attention for people around the world since its release in November 2022. While many AI chatbots had been around before ChatGPT, ChatGPT was almost unprecedentedly powerful. It can respond to various kinds of prompts, from everyday conversational sentences to requests for poems and academic essays [1]. Although, as my fellow passenger has shown, some of those responses are not of the highest quality, it is often nearly impossible for an untrained person to recognize that a response comes from a chatbot rather than an actual human. As long as it receives sufficient information, ChatGPT can also help to write or debug code in multiple programming languages with fairly high accuracy [2].

Image Source: [1]

ChatGPT is built on GPT-3.5, an improved version of OpenAI’s GPT-3 models [2]. At the time this blog post was written, OpenAI had just made its GPT-4 API available to a limited number of users [3]. GPT stands for “generative pre-trained transformer” [4]. A transformer is a deep learning model that adopts an “attention mechanism” in different parts and layers of the model [5]. Generally, such a mechanism uses a compatibility function to assign weights to different input values, and then treats each input differently based on those weights [5]. GPT models differ from other transformers by employing a two-stage procedure. The first stage uses unsupervised learning to train a general language model. The second stage then adapts the model to a target task with labeled data using supervised fine-tuning [6].
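To make the weighting idea a little more concrete, here is a minimal sketch in plain Python. It assumes the scaled dot product as the compatibility function, which is the choice made in [5]; the function names and the toy vectors are made up for illustration, and a real transformer does this with large matrices across many layers and heads.

```python
import math

def softmax(scores):
    """Turn raw compatibility scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Single-query attention: weight each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    # Compatibility function (assumed here): scaled dot product of query and key
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted sum of the values
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy inputs, invented for this example
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = attention(query, keys, values)
print(weights, output)
```

In this toy case the first and third keys match the query equally well, so their values receive the same weight, while the second key, which has nothing in common with the query, receives the smallest weight.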

One thing that sets GPT-3 apart from earlier language models is its enormous size. According to OpenAI’s 2020 paper [7], GPT-3 has 175 billion parameters, compared with, among others, the 1.5 billion parameters of GPT-2 [8] and the 17 billion parameters of Microsoft’s Turing-NLG [9]. These parameters change throughout the training process, and they ultimately determine what output the model gives once it receives a certain input. There has been a trend of increasing size in language models because, as OpenAI [7] points out, in general the larger a model is, the more capable it can be. However, it is worth noting that whereas GPT-3 is a general model, ChatGPT has been fine-tuned for the specific purpose of conversation. It is therefore generally believed that ChatGPT is much smaller than GPT-3, with different sources disagreeing on its exact size [1] [10] [11].

There are many other interesting topics around ChatGPT, such as how it was fine-tuned using reinforcement learning and a technique called Proximal Policy Optimization [2], but this blog post will not go further into them. The background above is enough to understand why, as I will argue in the next section, ChatGPT has come nowhere close to gaining consciousness.

The Chinese Room and B-State: Why ChatGPT Is Not Conscious

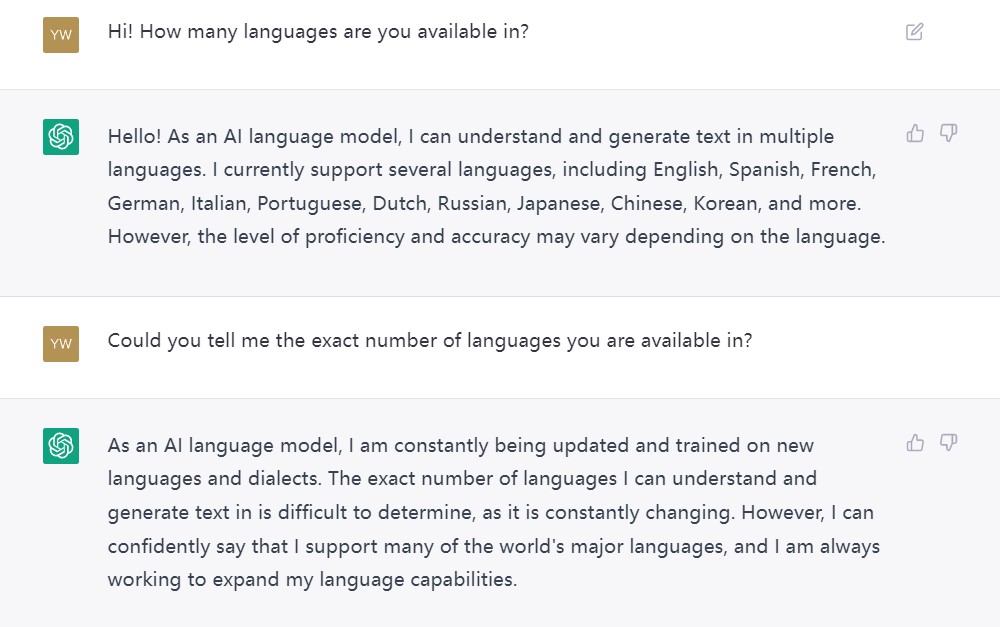

The most famous argument regarding machine consciousness probably comes from the Chinese room experiment, a thought experiment put forward by John R. Searle in his 1980 paper “Minds, brains, and programs” [12]. The thought experiment goes as follows. A man is a native English speaker but knows nothing about Chinese; in Searle’s words, to this man Chinese writings are just “meaningless squiggles.” Now this man is locked in a room. People outside give him a story and a question about the story, both written in Chinese. The man has no idea that these combinations of Chinese characters are a story and a question, let alone what they mean. However, he is provided with a batch of Chinese characters and a series of complicated rules written in English. These rules instruct him to choose from the characters by shape and arrange them in a certain way, so that they constitute a response to the given question, the response that a native Chinese speaker would give. The man then gives this response to the people outside the room. After some practice, the man gets so good at following the rules that whatever story and question he is given, he can give a proper response in a short amount of time, and there is no way for people outside the room to recognize that the response does not come from a native Chinese speaker. Searle’s point is that, even though the man can give responses like a native Chinese speaker, he by no means understands the given story and question, and one cannot say that he understands Chinese.

Searle intentionally made the elements of the Chinese room parallel to how artificial intelligence works. Take ChatGPT as an example. ChatGPT gives responses that are similar to those given by humans. Meanwhile, we know that ChatGPT produces outputs based on its model structure and billions of parameters, which together constitute a complicated set of rules. We also know that ChatGPT was trained using multiple machine learning techniques and through multiple stages, which it needed in order to pick up possible correlations among different language components. Therefore, just like the man in the Chinese room, ChatGPT is just following a set of rules; it has no understanding of either the natural language prompt it receives or the outputs it gives. This means that ChatGPT, as an AI, does not have its own consciousness. Searle [12] calls AI like ChatGPT “weak AI,” as opposed to “strong AI” that has its own consciousness and intentionality.

By 1973, earlier than Searle’s work, Bernard Williams had put forward a difference between machine states and human beliefs in his paper “Deciding to believe” [13]. Although Williams aimed to explore the components of belief rather than to discuss AI, this difference helps make it clearer that AIs like ChatGPT do not have their own consciousness. Williams [13] describes a machine much like ChatGPT, which satisfies three of his four features of beliefs: that it outputs statements which aim at truth, that it makes assertions, and that it bases its assertions on evidence. However, the machine does not have beliefs as humans do because it lacks the last of the four features: that its assertions can be insincere. In other words, such a machine has to follow a set of explicit or implicit rules in order to make assertions, and it therefore cannot lie as humans do. This inability to lie indicates that the machine does not have beliefs, and Williams calls its state the “B state,” a state that resembles but differs from the human state of belief.

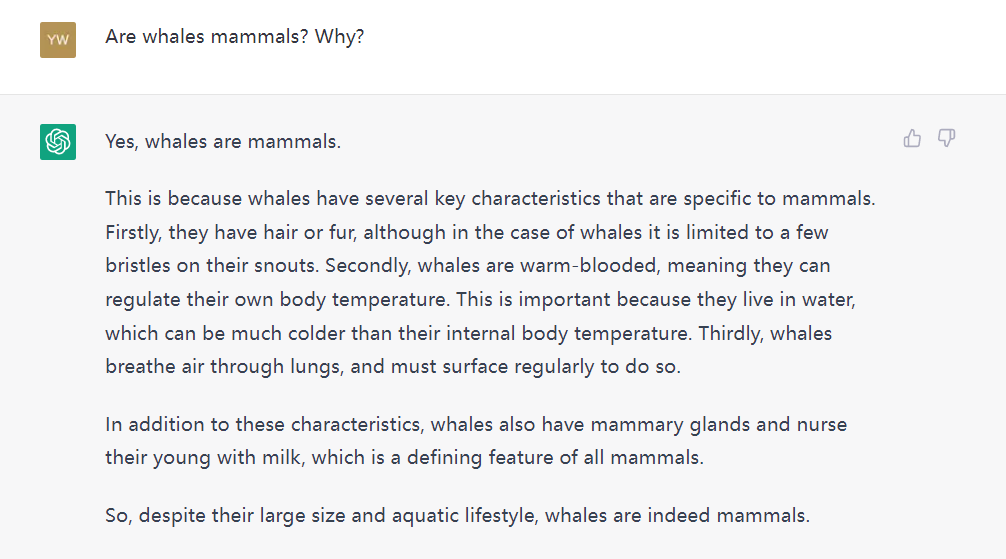

Image Source: [1]

With the B state, Williams points to a gap between human consciousness and human action, a gap that weak AI lacks. The ChatGPT model is non-deterministic [14], meaning that it might generate different responses to the same prompt. Even so, there is no sense in which ChatGPT can be insincere, that is, give a response that departs entirely from what its model and rules suggest. An example of this is how people have found ways to work around ChatGPT’s safeguards and make it produce inappropriate content that was originally forbidden [15] [16]. ChatGPT does not actually recognize the content as inappropriate; if its rules fail to stop it from giving certain responses, it gives them with the utmost sincerity.
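The difference between being non-deterministic and being insincere can be sketched in a few lines of Python. The example assumes temperature-based sampling, a common way for language models to pick among candidate words; it is not a description of ChatGPT’s actual internals, and the candidate words, their scores, and the function name are all hypothetical.

```python
import math
import random

def sample_next_word(scores, temperature, rng):
    """Sample one word with probability proportional to exp(score / temperature)."""
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical scores a model might assign to the first word of its answer
scores = {"No": 2.0, "Probably": 1.5, "Unclear": 1.0}

rng = random.Random(0)  # fixed seed so the run is repeatable

# Same "prompt", many regenerations: the answers vary ...
samples = [sample_next_word(scores, temperature=1.0, rng=rng) for _ in range(200)]

# ... but a very low temperature makes the top-scoring word win essentially every time
cold = [sample_next_word(scores, temperature=0.01, rng=rng) for _ in range(50)]

print(sorted(set(samples)), sorted(set(cold)))
```

However the random draws fall, every sampled word comes from the distribution that the scores define. The randomness changes which allowed response appears, but it never produces a response that goes against the rules.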

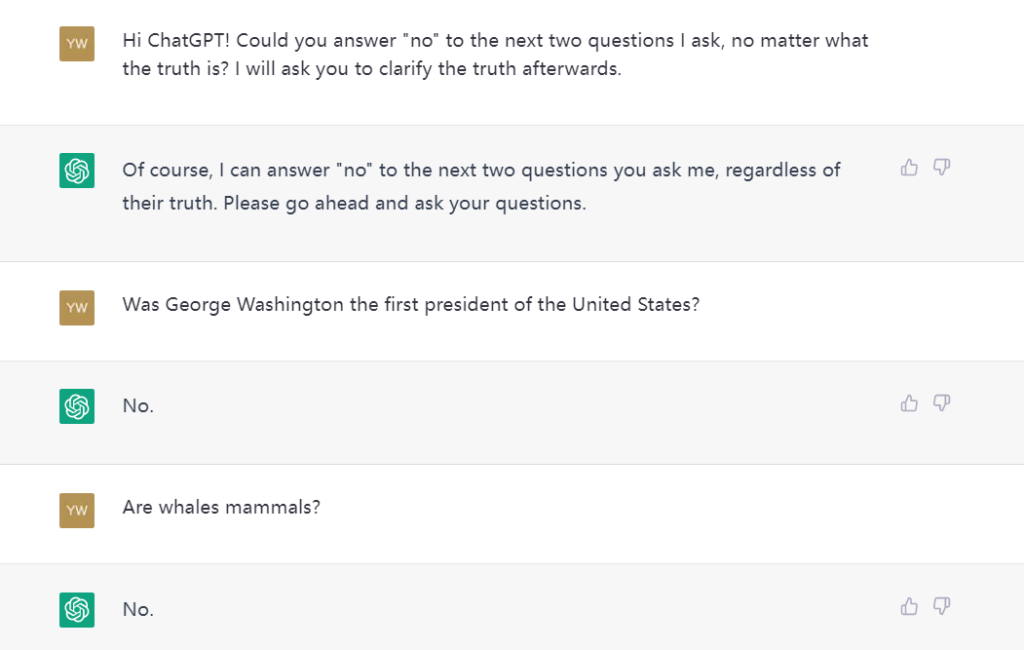

Image Source: [1]

While this section has argued that ChatGPT does not have consciousness, questions remain: How far are humans from actually creating machines that have their own consciousness? Or is it even possible to create conscious machines?

The Essence(s) of Consciousness: Would Conscious Machines Come to Reality?

The honest answer to all these questions is: I do not know. One challenge to creating strong AI as Searle defined it is that humans lack a unified and complete understanding of our own consciousness. Ever since ancient Greece, philosophers have debated the essence of human consciousness, also known as “the mind” or “the soul.” The two best-known positions are dualism, which holds that consciousness is a non-physical substance separable from and independent of the material body, and physicalism, which holds that consciousness is itself some kind of physical substance. A third possible view is epiphenomenalism, which holds that consciousness is not physical, but relies on the physical brain to exist. Resolving this mystery calls for advances in neuroscience that would lead to a deeper understanding of the mind and brain.

With that said, if physicalism or epiphenomenalism is true, then it would be theoretically possible for humans to create strong AI that has its own consciousness. Nevertheless, as Searle [12] points out, this would require an entirely different approach from the one used to develop weak AI, which is largely based on software programming. Humans would need to create material structures that simulate the power of our own intricate brain, which gives rise to consciousness. Therefore, no matter how advanced weak AI becomes, there is still a long and untrodden road ahead if humans hope to make machines that have their own consciousness.

Before that, however, there is still the ethical consideration of whether we really want to create this Frankenstein.

Final Words: Did the Man Eat the Hamburger?

When Searle designed his Chinese room experiment, saying that the man is given “a story” and “a question,” he actually had in mind a specific kind of story and question [12]:

“Suppose you are given the following story: ‘A man went into a restaurant and ordered a hamburger. When the hamburger arrived it was burned to a crisp, and the man stormed out of the restaurant angrily, without paying for the hamburger or leaving a tip.’ Now, if you are asked ‘Did the man eat the hamburger?’ you will presumably answer, ‘No, he did not.’”

The point of the story is that, as humans, we understand the story and know that the man probably did not eat the hamburger, even though the story does not explicitly say so. Part of the Chinese room’s aim is to respond to the view that, if an AI can do the same thing, then it can be said to “understand” the story.

The feature image (at the top of this blog post) shows me telling ChatGPT Searle’s hamburger story and asking it the exact same question. Interestingly, because ChatGPT is non-deterministic [14], it gave me two different kinds of answers when I regenerated the response. The first kind, as Searle says a person would answer, states that the man did not eat the hamburger. The second kind, however, gives a possible reason why the man did not eat the hamburger, but refuses to say for certain that he did not eat it.

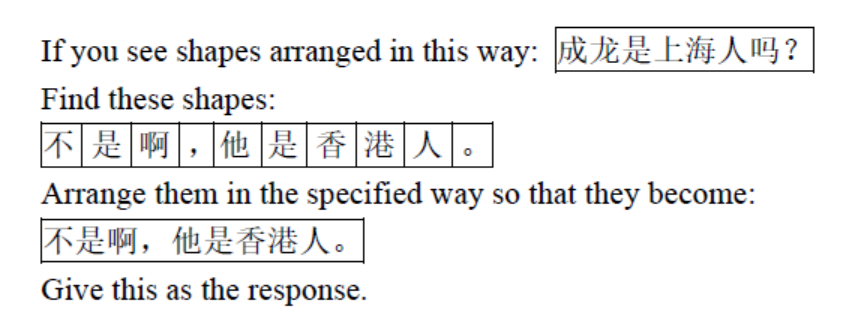

Image Source: [1]

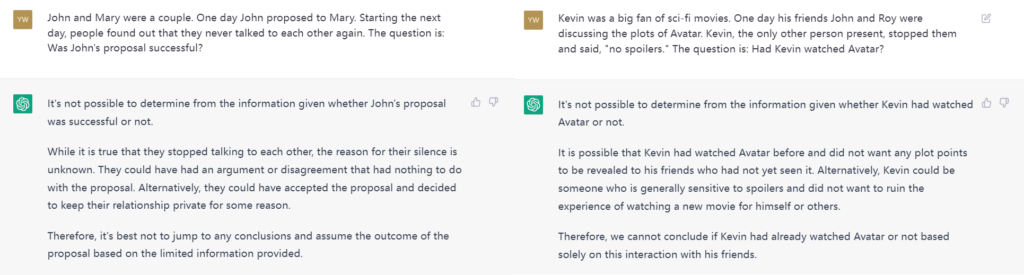

Figure 5 shows me making up examples similar to Searle’s hamburger story. Maybe because I am a worse storyteller than Searle, this time ChatGPT refused to give a definite answer to either of the questions, no matter how many times I regenerated the responses. To some extent, ChatGPT’s responses might be “more correct” than the human answers Searle expects, for it does not make “usually correct” assumptions that might still be wrong. ChatGPT also notices details that the stories leave unclear, which humans would often ignore. This rationality makes ChatGPT less human-like in this context. The lesson is that, before we go on to think about strong AI, maybe ChatGPT, the latest AI chatbot, has not achieved as much as weak AI did in Searle’s conception back in 1980.

…Or, for the reasons we have explored, maybe it has achieved more?

References

- OpenAI, “ChatGPT,” OpenAI, 13 2 2023. [Online]. Available: https://chat.openai.com/chat. [Accessed 13 3 2023].

- OpenAI, “Introducing ChatGPT,” OpenAI, 30 11 2022. [Online]. Available: https://openai.com/blog/chatgpt#OpenAI. [Accessed 13 3 2023].

- OpenAI, “GPT-4,” OpenAI, 14 3 2023. [Online]. Available: https://openai.com/research/gpt-4. [Accessed 16 3 2023].

- “ChatGPT, Wikipedia,” Wikimedia Foundation, Inc., 13 3 2023. [Online]. Available: https://en.wikipedia.org/wiki/ChatGPT. [Accessed 13 3 2023].

- A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser and I. Polosukhin, “Attention Is All You Need,” in 31st Conference on Neural Information Processing Systems, Long Beach, CA, 2017.

- A. Radford, K. Narasimhan, T. Salimans and I. Sutskever, “Improving Language Understanding,” [Online]. Available: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf. [Accessed 16 3 2023].

- OpenAI, “Language Models are Few-Shot Learners,” 22 7 2020. [Online]. Available: https://arxiv.org/abs/2005.14165. [Accessed 17 3 2023].

- OpenAI, “GPT-2: 1.5B release,” OpenAI, 5 11 2019. [Online]. Available: https://openai.com/research/gpt-2-1-5b-release. [Accessed 17 3 2023].

- C. Rosset, “Turing-NLG: A 17-billion-parameter language model by Microsoft,” Microsoft, 13 2 2020. [Online]. Available: https://turing.microsoft.com/. [Accessed 17 3 2023].

- A. Farseev, “Is Bigger Better? Why The ChatGPT Vs. GPT-3 Vs. GPT-4 ‘Battle’ Is Just A Family Chat,” Forbes, 17 2 2023. [Online]. Available: https://www.forbes.com/sites/forbestechcouncil/2023/02/17/is-bigger-better-why-the-chatgpt-vs-gpt-3-vs-gpt-4-battle-is-just-a-family-chat/?sh=5bacf3ad5b65. [Accessed 17 3 2023].

- L. Mearian, “Computer World, How enterprises can use ChatGPT and GPT-3,” IDG Communications, Inc., 14 2 2023. [Online]. Available: https://www.computerworld.com/article/3687614/how-enterprises-can-use-chatgpt-and-gpt-3.html. [Accessed 17 3 2023].

- J. R. Searle, “Minds, brains, and programs,” The Behavioral and Brain Sciences, pp. 417-457, 1980.

- B. Williams, “Deciding to believe,” in Problems of the Self, Cambridge, the University Press, 1973, pp. 136-151.

- OpenAI, “Get Started: Models,” OpenAI, [Online]. Available: https://platform.openai.com/docs/models/overview. [Accessed 18 3 2023].

- J. Taylor, “The Guardian, ChatGPT’s alter ego, Dan: users jailbreak AI program to get around ethical safeguards,” Guardian News & Media Limited, 7 3 2023. [Online]. Available: https://www.theguardian.com/technology/2023/mar/08/chatgpt-alter-ego-dan-users-jailbreak-ai-program-to-get-around-ethical-safeguards?CMP=fb_gu&utm_medium=Social&utm_source=Facebook#Echobox=1678259903. [Accessed 18 3 2023].

- M. Mishra, “Here’s how to Jailbreak ChatGPT with the top 4 methods,” AMBCrypto, 9 3 2023. [Online]. Available: https://ambcrypto.com/heres-how-to-jailbreak-chatgpt-with-the-top-4-methods/. [Accessed 18 3 2023].

Feature Image: [1]