Like many people's, my first introduction to GPUs had nothing to do with computer science. I wanted a personal computer (PC) for gaming and had decided the best way to stretch my money was to build one myself. Cue several weeks of trawling the internet for reliable sources on building a PC, then finding the parts I would need and hunting for the best deals, and finally I had a computer of my own. Little did I know that the Graphics Processing Unit (GPU) I had bought for rendering graphics would soon be training AI models for an internship. As anyone who has built a PC for similar reasons can attest, a GPU is one of the most valuable parts of a PC. I spent most of my build time deciding which GPU to put in my system. It’s important to know what value a GPU provides, so this blog post aims to cover the what, why, and how of GPUs.

What is a GPU?

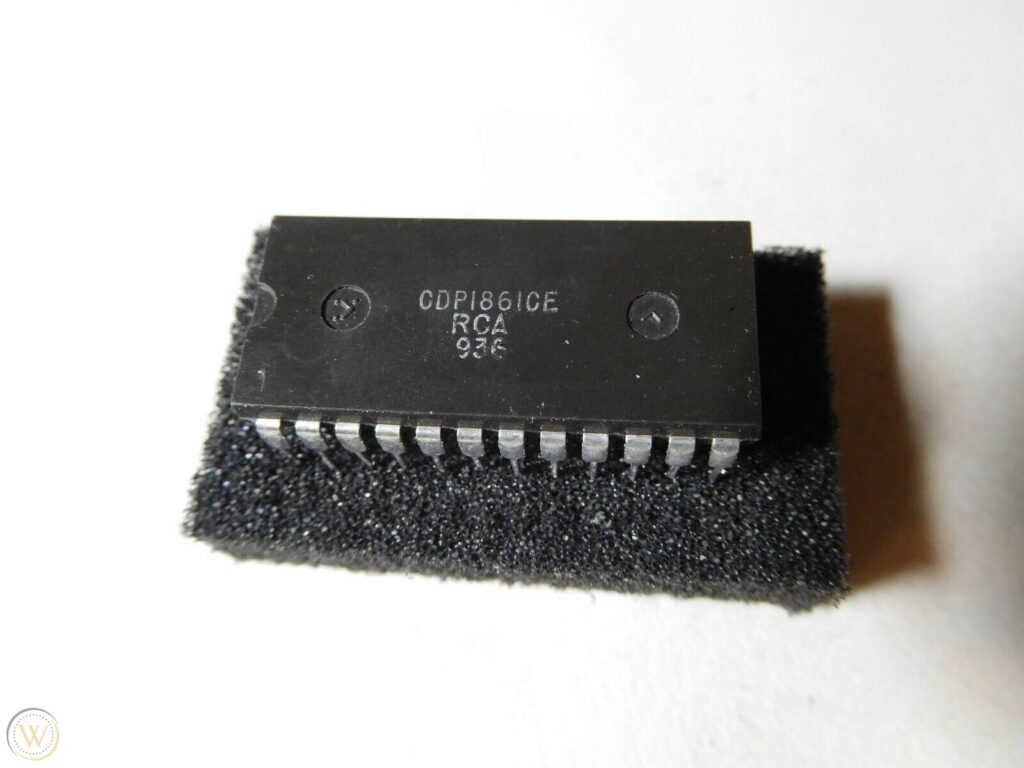

Before diving into what a GPU is, it helps to know its history. The term GPU is relatively recent, just a couple of decades old [1]. However, its lineage traces back to the 1970s. The first attempts at integrating graphics-focused hardware with a CPU were modular video adapters [2]. The latter half of the decade saw the invention of the “Pixie” CDP1861 video chip from RCA, with a 62 by 128 resolution. For reference, that is about 29 times fewer pixels than 360p (640 by 360). Other entries include the Television Interface Adapter used in the Atari 2600 and the MC6845 video address generator from Motorola, which IBM PCs would use in the early 80s. These were all undoubtedly graphics acceleration devices in the same vein as modern GPUs, but they were still a long way from what exists today.

Rapid innovation took place over the next decades as video processing options improved. Graphics slowly advanced from the Pixie’s modest 62×128 resolution to standard definition in the 480p range in the mid-1990s [2][3]. However, it wasn’t until 1999 that a graphics card was first officially called a GPU. Built by the rapidly rising company Nvidia, the first GPU was the GeForce 256, boasting 32MB of dedicated memory and some HD support, capable of displaying in 720p and 768p [4]. Some 20 years later, Nvidia’s latest graphics cards have been through several generations of development and have significantly improved capabilities. Modern graphics cards are clocked more than a dozen times faster and have orders of magnitude more processing power than the original GPU. An Nvidia RTX 4090 has 24GB of dedicated memory and runs resolutions up to 8k, which has 36 times more pixels than 720p [5].

The GPUs we have now are immensely capable and highly modular processing units, able to plug into almost any motherboard with a free PCIe slot. It’s also important to note that what is available now is nowhere near the end of the road for this technology. Moore’s Law is the famous observation that the number of transistors on a computer chip doubles roughly every two years, which has more or less held true since Gordon Moore first proposed it in 1965 and revised it to a two-year period in 1975. GPUs, by comparison, are currently doubling the performance of their predecessors every 12 to 18 months [1]. The incentive for improvement in graphics remains, as we can see in the relatively recent jumps from standard, to high, to ultra-high definition 4k and 8k resolutions. By all indications, GPUs will continue to become significantly more powerful.

However, this is not to say that a separate GPU is necessary for every computer. Indeed, many prebuilt PCs forgo a discrete GPU in favor of integrated graphics processors. Apple takes this approach with its recent M1 chip, which combines the CPU and graphics processor on a single chip [6]. Both options have pros and cons, which can be briefly summarized as follows: integrated graphics deliver less performance at a lower cost, while a separate GPU gives more performance at a higher cost. Integrated graphics is a tradeoff taken by many prebuilt desktops and laptops.

In summary, a GPU is a modular piece of computer hardware specifically designed to handle display output, that is, graphics.

A Foray into Computer Architecture

A modern computer usually has up to two kinds of processor to handle its computations: the GPU and the CPU, or Central Processing Unit. A GPU differentiates itself from a CPU by being optimized for highly parallel integer and floating-point computation, scaled across many cores for high bandwidth [1]. That mess of technical jargon boils down to this: a GPU is specialized for producing display output and generating graphics. In contrast, a CPU must be able to perform every computation a computer might need without requiring additional hardware. Thus, a CPU is a highly generalized processor.

But how does a GPU accomplish its task? A basic rendering process might include input processing, vertex shading, geometry shading, rasterization, pixel shading, and output processing steps. In sequence, a GPU loads and compares information, builds geometry, identifies significant features, rasterizes (turns information into pixels), combines multiple layers of data for each pixel into a frame, and sends that frame to output. Each of these operations can be, and in some cases must be, encoded as matrices to be computed efficiently. This commonality is why GPU specialization and optimization have become so refined. In a nutshell, a GPU quickly and efficiently performs large numbers of matrix operations. For some context on the scale of what these processors do, a single 4k image has over 8 million pixels. Generating graphics at 60 frames per second means computing almost 500 million pixels per second, each pixel having multiple parameters determining its appearance. And that is only the last step of the rendering process described above. After decades of improvements, the resulting processors have gotten quite good at generating graphics. At the same time, they are also the best option for other kinds of computing that require similar data processing.
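
To make the scale and the matrix framing concrete, here is a minimal Python sketch. It first reproduces the 4k pixel arithmetic, then applies one 2×2 rotation matrix to a handful of vertices, roughly the kind of transform a vertex shading step might perform. The function names (`pixels_per_second`, `transform_vertices`) and the sample triangle are illustrative assumptions, and the code runs sequentially on a CPU. The point is only that each vertex is computed independently of the others, which is what lets a GPU work on many of them at once.

```python
import math

def pixels_per_second(width, height, fps):
    """Pixels that must be produced each second at a given resolution and frame rate."""
    return width * height * fps

def transform_vertices(matrix, vertices):
    """Apply a 2x2 matrix to each (x, y) vertex.

    Every output vertex depends only on its own input vertex, so the
    individual computations are independent of one another.
    """
    (a, b), (c, d) = matrix
    return [(a * x + b * y, c * x + d * y) for x, y in vertices]

# 4k UHD is 3840 x 2160 pixels.
pixels_4k = 3840 * 2160                          # 8,294,400 pixels per frame
rate_4k60 = pixels_per_second(3840, 2160, 60)    # 497,664,000 pixels per second

# Rotate a small triangle by 90 degrees counterclockwise (hypothetical sample data).
theta = math.pi / 2
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]
triangle = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
rotated = transform_vertices(rotation, triangle)
```

A real scene has millions of vertices and uses larger matrices (typically 4×4 for 3D), but the shape of the work is the same: one small matrix operation repeated across a very large set of independent inputs.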

What can we do with GPUs?

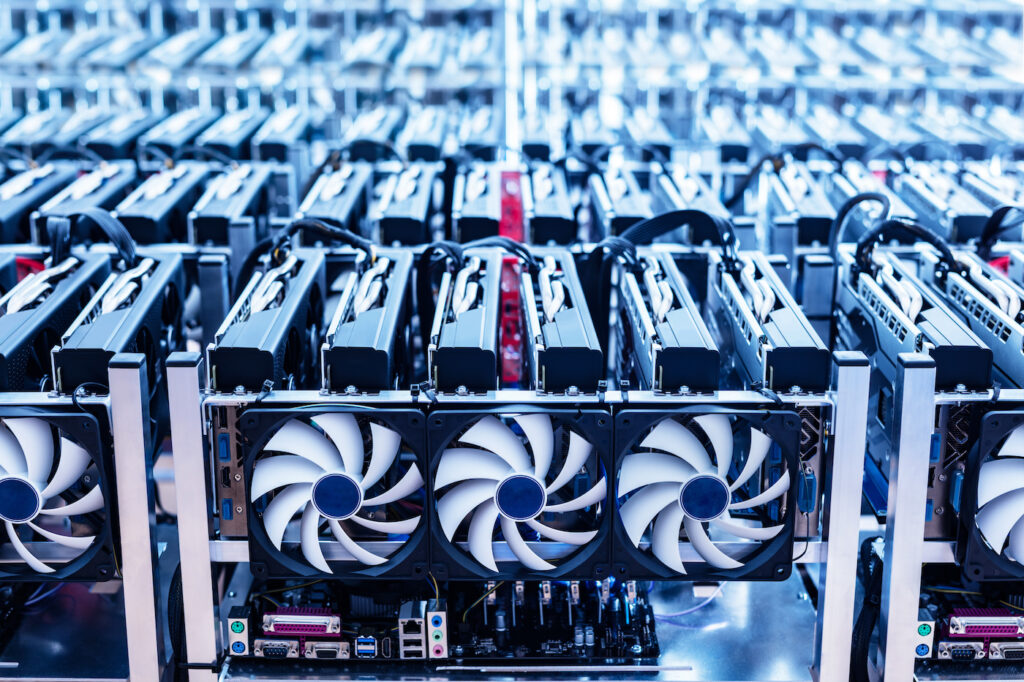

GPUs were originally intended to generate computer graphics. However, they have many other applications, including crypto mining, simulation, AI, data science, High-Performance Computing (HPC), and data centers. You may be familiar with photos of crypto mining rigs with hundreds or thousands of GPUs linked in server racks, running 24/7 to mine crypto. This usage spiked in popularity with the crypto boom, and some graphics cards were even optimized to perform mining computations over rendering graphics. However, some cryptocurrencies, such as Ethereum, have recently switched from proof-of-work mining to proof of stake, making crypto mining with GPUs less popular. As a result, waves of used GPUs came up for sale as crypto miners unloaded their stockpiles.

Linking GPUs together, as mining rigs do, has other uses, as seen in HPC clusters. Typically owned by universities, companies, or other institutions with the necessary interest and funding, HPC clusters can have many individual CPUs and GPUs, allowing users to quickly perform tasks that might take days or months on a PC. Another use for GPUs is in Artificial Intelligence (AI). With hardware acceleration from a GPU, a PC can train a reasonably accurate neural network. Some simpler networks, such as those used in AI tutorials, can be trained on small datasets like the MNIST handwritten digits dataset in seconds and achieve accuracy in the upper 90 percent range. Under the hood, a neural network is mostly linear algebra: each layer multiplies its inputs by a matrix of weights, and training repeatedly adjusts those weights. Thus, training a neural network means performing many matrix operations, precisely what GPUs do best. An example that combines large-scale hardware and AI is AlphaZero, introduced in 2017: a deep reinforcement learning system that convincingly defeated the best available chess engine after just nine hours of training [7]. Its training ran on a large cluster of Google’s Tensor Processing Units (TPUs), accelerators that, like GPUs, are built around fast matrix math.
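
As a rough illustration of why neural networks map so well onto matrix hardware, the sketch below computes one fully connected layer in plain Python. The function name `dense_layer`, the sigmoid activation, and the tiny weight values are all assumptions chosen for illustration, not taken from any particular network or library.

```python
import math

def dense_layer(weights, biases, inputs):
    """One fully connected layer: sigmoid(W x + b), written with plain lists."""
    outputs = []
    for row, bias in zip(weights, biases):
        # Each output neuron is a dot product of one weight row with the inputs.
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs

# Hypothetical layer with 2 neurons and 3 inputs; the numbers are made up.
weights = [[0.5, -0.5, 0.0],
           [1.0,  1.0, 1.0]]
biases = [0.0, -3.0]
inputs = [1.0, 1.0, 1.0]
activations = dense_layer(weights, biases, inputs)
```

Each row's dot product is independent of the others, so a layer with thousands of neurons is thousands of independent multiply-and-add chains. Training repeats this kind of computation, plus the matching gradient calculations, over every example in the dataset, which is the workload a GPU is built to parallelize.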

Individuals without mining rigs or HPC clusters can still achieve accelerated computing speeds with a GPU. Companies like Nvidia and AMD offer tailored solutions for employing graphics cards in non-graphical computation tasks. Nvidia has one of the more developed GPU computing platforms, Compute Unified Device Architecture (CUDA). Alternatives exist, such as OpenCL, an open standard that runs on GPUs from different vendors. Once a PC is equipped with a graphics card and proper drivers, the options available increase significantly. An Nvidia GPU with CUDA can run general-purpose code directly and is no longer limited to standard tasks like displaying video and rendering graphics. This opens up some of the previously mentioned alternate uses of GPUs, like training and running neural networks quickly. Programs or packages that rely on AI algorithms will also run faster, assuming they allow control over which processor they use.
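
As one example of what "control over which processor they use" can look like, the sketch below checks whether a CUDA-capable GPU is visible and falls back to the CPU otherwise. It assumes the PyTorch package, which this post does not otherwise cover, as a stand-in for any GPU-aware library. The helper name `pick_device` is hypothetical, while `torch.cuda.is_available()` is PyTorch's own check.

```python
import importlib.util

def pick_device():
    """Return "cuda" if PyTorch is installed and sees a CUDA GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed, so there is nothing to query.
    try:
        import torch
    except ImportError:
        return "cpu"  # Installed but not importable; fall back to the CPU.
    return "cuda" if torch.cuda.is_available() else "cpu"

device = pick_device()
```

In PyTorch, a string like this can then be passed to calls such as `tensor.to(device)` so the same script runs on either processor. Other libraries expose the choice differently, so treat this as a pattern, not a universal recipe.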

Summary

GPUs specialize in performing matrix operations, allowing them to generate graphics, process data, and run simulations. By linking large numbers of GPUs together, we can build mining rigs or HPC clusters that perform intensive computations quickly. Having a GPU in a PC also allows for accelerated execution when using some applications and packages, not to mention better graphics performance.

References

[1] J. Nickolls and D. Kirk, “Graphics and Computing GPUs,” in Computer Organization and Design: MIPS Edition, 6th ed. Elsevier, 2021, pp. C-1–C-83. [Online]. Available: https://www.elsevier.com/__data/assets/pdf_file/0010/1191376/Appendix-C.PDF

[2] “The History of the Modern Graphics Processor,” TechSpot, Dec. 01, 2022. https://www.techspot.com/article/650-history-of-the-gpu/ (accessed May 11, 2023).

[3] “History of the Modern Graphics Processor, Part 2,” TechSpot, Dec. 04, 2020. https://www.techspot.com/article/653-history-of-the-gpu-part-2/ (accessed May 11, 2023).

[4] “NVIDIA GeForce 256 SDR Specs,” TechPowerUp, May 11, 2023. https://www.techpowerup.com/gpu-specs/geforce-256-sdr.c731 (accessed May 11, 2023).

[5] “NVIDIA GeForce RTX 4090 Specs,” TechPowerUp, May 11, 2023. https://www.techpowerup.com/gpu-specs/geforce-rtx-4090.c3889 (accessed May 11, 2023).

[6] “MacBook Air with M1 chip,” Apple. https://www.apple.com/macbook-air-m1/ (accessed May 11, 2023).

[7] D. Silver et al., “A general reinforcement learning algorithm that masters chess, shogi and Go through self-play,” Science, vol. 362, no. 6419, pp. 1140–1144, Dec. 2018, doi: 10.1126/science.aar6404.